Big O notation is one of the most important tools computer scientists use to analyze algorithms. It is also worth learning in detail if you are a software developer. This article explains Big O notation and assumes you already have some experience writing code. Some of the in-depth material also requires high-school maths, which may make complete beginners uncomfortable. But if you’re ready, let’s get started!

We will discuss Big O notation in detail in this article. We begin with an example algorithm to open our minds. Then we go into a bit of mathematics to understand it formally. After that, we discuss some common variations of Big O notation. Finally, we explore some of the limits of Big O in a realistic situation.

What Exactly is Big O Notation, and Why is it Important?

Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity. It is a member of a family of notations invented by Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation.

In plain words, Big O notation describes the complexity of your code using algebraic terms.

To understand what Big O notation is, we can look at a typical example, O(n²), which is usually pronounced “Big O squared.” The letter “n” represents the input size, and the function “g(n) = n²” inside the O() gives us an idea of how complex the algorithm is with respect to the input size.

A typical algorithm with a complexity of O(n²) is the selection sort algorithm. Selection sort is a sorting algorithm that iterates through the list to ensure that the element at index i is the ith smallest/largest element of the list.

The algorithm can be described by the following code. To make sure that the ith element is the ith smallest element in the list, the algorithm first iterates through the list with a for loop. Then, for every element, it uses another for loop to find the smallest element in the remaining part of the list.

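    SelectionSort(List) {
      for(i from 0 to List.Length - 1) {
        // index of the smallest element found so far in List[i..]
        SmallestIndex = i
        for(j from i + 1 to List.Length - 1) {
          if(List[SmallestIndex] > List[j]) {
            SmallestIndex = j
          }
        }
        // move the smallest remaining element into position i
        Swap(List[i], List[SmallestIndex])
      }
    }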

In this scenario, we consider the variable List as the input, so the input size n is the number of elements in List. Assume that the if statement, and the value assignment inside it, take constant time. Then we can find the Big O notation for the SelectionSort function by counting how many times the statements are executed.
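As a rough check of that counting argument, here is a minimal Python sketch of selection sort that also counts how many times the comparison runs. The function name `selection_sort` and the counter are illustrative choices, not part of the original pseudocode. For a list of n items the comparison should run n(n − 1)/2 times, which grows like n².

```python
def selection_sort(items):
    """Return (sorted copy of items, number of comparisons made)."""
    result = list(items)
    comparisons = 0
    n = len(result)
    for i in range(n):
        smallest = i  # index of the smallest element seen so far in result[i:]
        for j in range(i + 1, n):
            comparisons += 1
            if result[j] < result[smallest]:
                smallest = j
        # move the smallest remaining element into position i
        result[i], result[smallest] = result[smallest], result[i]
    return result, comparisons


for n in (10, 100, 1000):
    _, count = selection_sort(range(n, 0, -1))
    # the measured count next to the closed form n(n - 1)/2
    print(n, count, n * (n - 1) // 2)
```

Multiplying the input size by 10 multiplies the number of comparisons by roughly 100, which is the quadratic growth that O(n²) describes.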

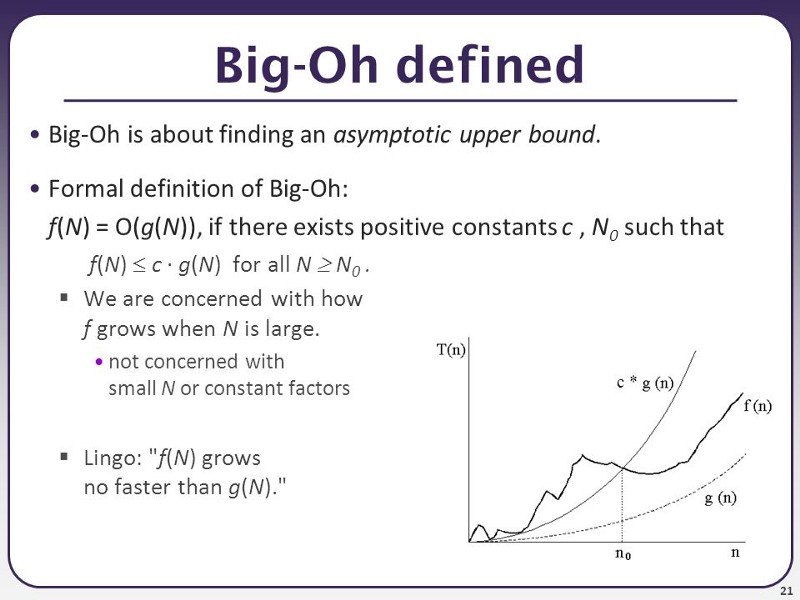

Formal Definition of Big O Notation

Once upon a time there was an Indian king who wanted to reward a wise man for his excellence. The wise man asked for nothing but some wheat to fill a chessboard. But here were his rules: he wanted 1 grain of wheat on the first tile, then 2 on the second tile, then 4 on the next, and so on. Each tile on the chessboard had to hold twice as many grains as the previous one. The naive king agreed without hesitation, believing it would be a trivial demand to satisfy, until he actually tried it…

So how many grains of wheat does the king owe the wise man? A chessboard has 8 by 8 squares, that is, 64 tiles, so the final tile alone should hold 2⁶³ grains of wheat, and the whole board 2⁶⁴ − 1 grains. An online calculator gives about 1.8446744 * 10¹⁹ for the total, which is around 18 followed by 18 zeroes. Assuming each grain of wheat weighs 0.01 grams, that is 184,467,440,737 tonnes. And 184 billion tonnes is quite a lot, isn’t it?

Exponential growth gets very fast later on, doesn’t it? The same logic applies to computer algorithms. If the effort needed to perform a task grows exponentially with the input size, it can end up becoming enormous.

Now, 64 squared is 4096. If you add that number to 2⁶⁴, it is lost beyond the significant digits. This is why we only care about the dominant terms when we look at the growth rate. And since we want to analyze growth with respect to the input size, coefficients, which only multiply the number rather than grow with the input size, carry no useful information.
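The arithmetic in the story can be checked with a few lines of Python. This is only a sketch: the constant names are made up for the example, and the 0.01 grams per grain is the same assumption used in the text.

```python
TILES = 64
GRAMS_PER_GRAIN = 0.01  # assumed weight of one grain, as in the story

last_tile = 2 ** (TILES - 1)  # grains on the final tile
total = 2 ** TILES - 1        # grains on the whole board: 1 + 2 + 4 + ...
tonnes = total * GRAMS_PER_GRAIN / 1_000_000  # grams to tonnes

print(f"last tile:   {last_tile:,} grains")
print(f"whole board: {total:,} grains")
print(f"weight:      {tonnes:,.0f} tonnes")

# How much does adding 64 squared change a number as large as 2 to the 64?
relative_change = TILES ** 2 / 2 ** TILES
print(f"relative change from adding 64^2: {relative_change:.1e}")
```

The relative change is so small that it disappears in any rounded figure, which is the intuition behind keeping only the dominant term.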

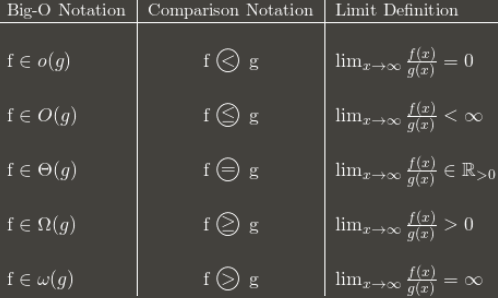

Big O, Little O, Omega & Theta

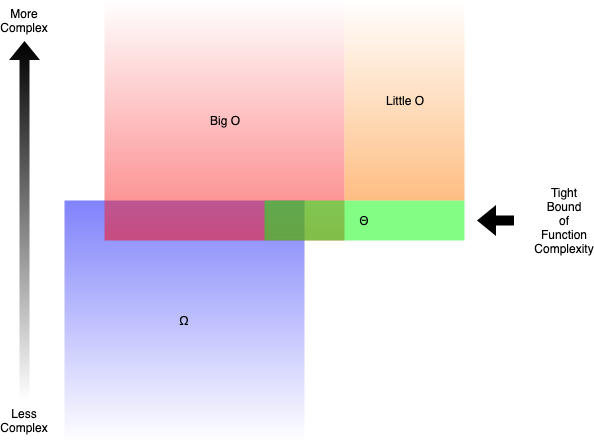

- The upper bound of complexity is defined by Big O (O()).

- The lower bound of complexity is defined by Omega (Ω()).

- Theta (Θ()) expresses the complexity’s exact bound.

- The upper bound, excluding the exact bound, is defined by little O (o()).

For example, the function g(n) = n² + 3n is O(n³), o(n⁴), Θ(n²) and Ω(n). But you would still be right if you say it is Ω(n²) or O(n²).
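One informal way to see these bounds is to divide g(n) by each candidate function and watch what happens as n grows. The sketch below is a numerical illustration, not a proof, and the helper name `g` simply mirrors the function in the example.

```python
def g(n):
    return n ** 2 + 3 * n


print(f"{'n':>8} {'g(n)/n':>12} {'g(n)/n^2':>10} {'g(n)/n^3':>10}")
for n in (10, 100, 1000, 10_000):
    print(f"{n:>8} {g(n) / n:>12.1f} {g(n) / n**2:>10.4f} {g(n) / n**3:>10.6f}")
```

The first ratio keeps growing, so n is only a lower bound. The second settles near 1, which is what an exact bound of n² looks like. The third shrinks towards 0, so n³ is an upper bound that is not tight.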

Generally speaking, when we talk about Big O, what we actually mean is Theta. It is rather meaningless to give an upper bound that is far larger than the scope of the analysis. This would be like solving an inequality by putting ∞ on the larger side, which will almost always make you right.

But how do we determine which functions are more complex than others? We will look at this in depth in the next section.

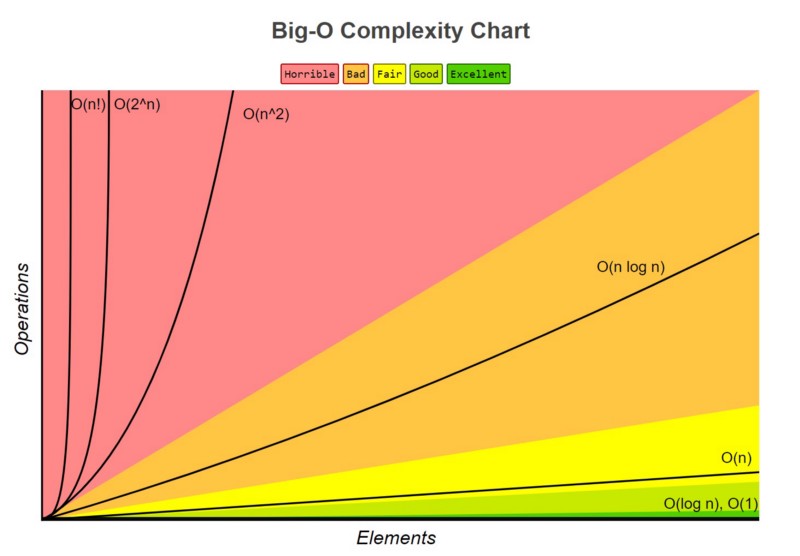

Complexity Comparison Between Typical Big Os

When we try to find the Big O for a particular function g(n), we only care about the dominant term of the function. The dominant term is the term that grows the fastest.

For example, n² grows faster than n, so if we have something like g(n) = n² + 5n + 6, it is O(n²). If you have taken some calculus before, this is very similar to the shortcut for finding limits of fractional polynomials, where in the end you only care about the dominant terms of the numerator and the denominator.

But which function grows faster than the others? There are quite a few rules.

1. The least complex is O(1)

If you can create an algorithm that solves the problem in O(1), often called “constant time,” you’re probably at your best. In some scenarios the complexity may even drop below O(1); we can then analyze it by finding its O(1/g(n)) counterpart. For example, O(1/n) is more complex than O(1/n²).

2. O(log(n)) is more complex than O(1), but less complex than polynomials.

Logarithms often come up in divide and conquer algorithms, so O(log(n)) is generally a good complexity to aim for; efficient sorting algorithms, for instance, run in O(n * log(n)). O(log(n)) is less complex than O(√n), because the square root function can be considered a polynomial with an exponent of 0.5.

3. Polynomials get more complex as the exponent rises

For example, O(n⁵) is more complex than O(n⁴). Because of their simplicity, we have already looked at quite a few examples of polynomials in the previous sections.

4. Exponentials are more complex than polynomials, as long as the exponent is a positive multiple of n.

O(2ⁿ) is more complex than O(n⁹⁹), but O(2⁻ⁿ) is actually less complex than O(1). We generally take 2 as the base for exponentials and logarithms because things tend to be binary in Computer Science, but the base of an exponential can be changed by changing the coefficient in the exponent. If the base of a logarithm isn’t specified, it’s assumed to be 2.

5. Factorials are more complex than exponentials.

If you’re curious about the reasoning, look up the Gamma function, which is an analytic continuation of the factorial. A short argument is that factorials and exponentials involve the same number of multiplications, but the numbers being multiplied grow for factorials while staying constant for exponentials.

6. Multiplying terms

When multiplying, the complexity will be greater than the original, but no greater than the result of multiplying by something more complex. For example, O(n * log(n)) is more complex than O(n) but less complex than O(n²), because O(n²) = O(n * n) and n is more complex than log(n).
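To get a feel for the ordering in the rules above, the following sketch evaluates one typical function from each family at a few input sizes. The list `growth_functions` and the helper `growth_row` are names invented for this example, and the chosen sizes are arbitrary.

```python
import math

# One representative per family, listed from least to most complex.
growth_functions = [
    ("1", lambda n: 1),
    ("log2(n)", lambda n: math.log2(n)),
    ("sqrt(n)", lambda n: math.sqrt(n)),
    ("n", lambda n: n),
    ("n*log2(n)", lambda n: n * math.log2(n)),
    ("n^2", lambda n: n ** 2),
    ("2^n", lambda n: 2 ** n),
    ("n!", lambda n: math.factorial(n)),
]


def growth_row(n):
    """Evaluate every function in growth_functions at input size n."""
    return [f(n) for _, f in growth_functions]


for n in (16, 32, 64):
    print(f"n = {n}")
    for (name, _), value in zip(growth_functions, growth_row(n)):
        print(f"  {name:<10} {value:.3g}")
```

For small inputs neighbouring functions can be close or even equal (log2(16) and sqrt(16) are both 4), which is a reminder that Big O only describes behaviour as n grows large.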

Time and Space Complexity

So far, we’ve only discussed the time complexity of algorithms. That is, we have only cared about how long the program takes to complete the task. What also matters is how much space the program takes to complete the task. A program’s space complexity is related to how much memory it requires, so it is also an important factor to consider.

Space complexity works in the same way as time complexity. For example, selection sort has a space complexity of O(1): it only stores one minimum value and its index for comparison, so the maximum space used does not increase with the input size.

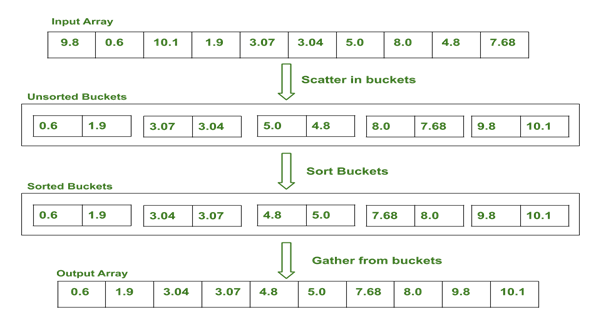

Some algorithms trade space for time. Bucket sort, for example, has a space complexity of O(n), and in exchange it can bring the time complexity down to roughly O(n), because it never compares elements with each other. Bucket sort sorts the array by creating a sorted list of all the array’s possible elements, and then incrementing a count whenever an element is found. In the end, the sorted array is made up of the sorted list elements repeated by their counts.
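The approach described above can be sketched as follows, assuming the input consists of small non-negative integers. The function name and the `max_value` parameter are hypothetical choices for this example; this counting variant is often called counting sort. Its extra space grows with the number of possible values, and its running time grows with the input size plus the number of possible values.

```python
def bucket_sort(values, max_value):
    """Sort non-negative integers no larger than max_value by counting them."""
    # One counter per possible value: this is the extra space the method pays for.
    counts = [0] * (max_value + 1)
    for v in values:
        counts[v] += 1

    # Walk the possible values in order and repeat each one by its count.
    result = []
    for value, count in enumerate(counts):
        result.extend([value] * count)
    return result


print(bucket_sort([3, 0, 2, 3, 1, 0], max_value=3))  # prints [0, 0, 1, 2, 3, 3]
```

Notice that no two elements are ever compared with each other, which is why the running time does not follow the usual bounds for comparison-based sorting.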

Best, Average, Worst, Expected Complexity

The complexity can also be analyzed for the best case, worst case, average case, and expected case.

Take insertion sort, for example. Insertion sort goes through the list element by element. If an element is smaller than the previous element, it is moved backward until it is no smaller than the element before it. If the array is already sorted at the start, no swap is made. The algorithm then only goes through the list once, giving it a time complexity of O(n). As a result, we say that the best-case time complexity of insertion sort is O(n). O(n) complexity is also referred to as linear complexity.
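The gap between the best case and the worst case shows up clearly when the comparisons are counted. The sketch below is one straightforward way to write insertion sort in Python; the function name and the comparison counter are additions made for illustration.

```python
def insertion_sort(items):
    """Return (sorted copy of items, number of comparisons made)."""
    result = list(items)
    comparisons = 0
    for i in range(1, len(result)):
        j = i
        # Move result[j] backward while it is smaller than its predecessor.
        while j > 0:
            comparisons += 1
            if result[j - 1] <= result[j]:
                break
            result[j - 1], result[j] = result[j], result[j - 1]
            j -= 1
    return result, comparisons


n = 100
_, best = insertion_sort(range(n))          # already sorted input
_, worst = insertion_sort(range(n, 0, -1))  # input sorted in reverse
print(best, worst)  # prints 99 4950
```

An already sorted list needs n − 1 comparisons, which is linear, while a reversed list needs n(n − 1)/2, which is quadratic.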

Sometimes an algorithm simply has bad luck. Quick sort, for example, takes O(n²) time if the elements are sorted in the opposite order and a simple pivot choice is used, but on average it sorts the array in O(n * log(n)) time. In general, we look at an algorithm’s worst-case performance when evaluating its time complexity. More on that, as well as quick sort, will be covered in the following section. The average-case complexity describes the expected performance of the algorithm. It sometimes involves calculating the probability of each scenario. Going into the details can get complicated, so it is not covered in this article. A cheat sheet on the time and space complexity of typical algorithms is given below.